Large Language Models (LLMs) are powerful but imperfect. One common issue is hallucination, which occurs when the model generates incorrect or fabricated information with high confidence.

For example, asking an LLM for a word that contains exactly two r's might return "mirror", which actually contains three.

This post explains why hallucinations occur and how to address them, then walks through an example that improves the reliability of LLM responses.

Why do hallucinations happen in LLMs?

LLMs generate text by repeatedly predicting a likely next token (a word or word fragment) based on the input and the patterns learned during training. This probabilistic approach produces fluent, coherent responses but struggles with tasks that require exact accuracy.
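To make the probabilistic step concrete, here is a toy sketch of how a next token can be chosen from scores. The candidate tokens and their scores are invented for illustration, and whole words stand in for tokens; real models score tens of thousands of tokens at every step.

```python
import math
import random

# Invented scores (logits) for candidate next tokens after a prompt like
# "A word with exactly two r's is". Purely illustrative numbers.
logits = {"mirror": 2.1, "carrot": 1.7, "error": 1.2, "apple": -0.5}


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into a probability distribution."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


probs = softmax(logits)

# Greedy decoding picks the most probable token...
greedy = max(probs, key=probs.get)

# ...while sampling picks a token in proportion to its probability.
rng = random.Random(0)
sampled = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

print(greedy)  # mirror
print({tok: round(p, 3) for tok, p in probs.items()})
```

Nothing in this procedure checks whether the chosen word really has two r's; the choice depends only on the scores.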

Three main reasons contribute to hallucinations:

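- Probabilistic text generation: LLMs do not perform explicit calculations or follow strict logical rules. Instead, they predict the next token based on patterns seen during training. Because text is processed as tokens rather than individual characters, the model does not directly see the letters in a word. This works well for natural language but often fails for tasks like counting letters or precise validation.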

- Prompt interpretation and ambiguity: The way a prompt is phrased can introduce ambiguity or lead to misinterpretation. Even clear instructions do not guarantee a correct answer when the task exceeds what the model can reliably do.

- Lack of self-correction: LLMs generally cannot recognize their own mistakes without external feedback. They lack an internal mechanism to verify or correct outputs before responding.

These limitations result in hallucinations, where the model confidently produces incorrect or fabricated information.
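By contrast, counting letters is trivial for ordinary code, which follows exact rules instead of patterns. A minimal Python sketch (the function names are illustrative, not from any library):

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def words_with_exact_count(words: list[str], letter: str, n: int) -> list[str]:
    """Keep only the words that contain `letter` exactly `n` times."""
    return [w for w in words if count_letter(w, letter) == n]


print(count_letter("mirror", "r"))  # 3
print(words_with_exact_count(["mirror", "carrot", "error", "sorry"], "r", 2))
# ['carrot', 'sorry']
```

Unlike a model's guess, this result is the same on every run and is correct by construction.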

What is MCP?

The Model Context Protocol (MCP) is an open standard that defines how applications provide contextual information to Large Language Models (LLMs). MCP creates a uniform interface for connecting LLMs with external tools, data sources, and services.

— https://modelcontextprotocol.io/introduction

By standardizing this interaction, MCP enables AI applications to extend beyond the limitations of the model’s internal knowledge and capabilities. Instead of relying solely on probabilistic text generation, LLMs can invoke specialized external functions to perform precise, deterministic tasks.

MCP simplifies the integration process by offering a consistent protocol, reducing the complexity of connecting diverse tools to LLM-powered systems.
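Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds a `tools/call` request and a matching text result, following the message shapes described in the MCP specification; the tool name `count_letter` and its arguments are hypothetical.

```python
import json


def tool_call_request(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def tool_text_result(request_id: int, text: str) -> dict:
    """Build the matching response carrying a single text content item."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # echoes the request id so the client can match them
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


# "count_letter" is a hypothetical tool name used for illustration.
request = tool_call_request(1, "count_letter", {"word": "mirror", "letter": "r"})
response = tool_text_result(1, "3")

# On the wire, both are ordinary JSON text.
print(json.dumps(request))
print(json.dumps(response))
```

Because every tool call uses this same envelope, a client does not need tool-specific wiring to invoke a new tool.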

How does MCP help?

MCP addresses hallucination issues by enabling LLMs to delegate precise or computational tasks to external tools.

Key benefits of MCP include:

- Tool invocation: MCP lets an LLM application call external functions or APIs while the model is producing a response. For example, when asked to count characters in a word, the model can call a deterministic counting function instead of guessing. Offloading precision work to specialized services reduces errors.

- Standardized integration: MCP defines a uniform protocol for connecting LLMs with diverse tools and data sources. Developers can integrate new capabilities without custom adapters for each LLM. This standardization simplifies development and accelerates deployment.

- Flexible context provision: MCP supports dynamic context injection, allowing applications to supply relevant information to LLMs at runtime. Grounding the prompt in current, task-specific data reduces ambiguity and misinterpretation.

By transforming LLMs from standalone probabilistic generators into orchestrators of external tools, MCP enhances the overall reliability of AI systems.
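Standardized integration works because every MCP tool is described in the same shape: a name, a description, and a JSON Schema (`inputSchema`) for its arguments, which servers return from `tools/list`. The sketch below shows such a definition for a hypothetical `count_letter` tool, plus a deliberately simplified argument check (only required keys and string types, not full JSON Schema validation).

```python
# Tool definition in the shape MCP servers advertise via "tools/list".
# The tool itself ("count_letter") is hypothetical.
COUNT_LETTER_TOOL = {
    "name": "count_letter",
    "description": "Count how many times a letter occurs in a word.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "word": {"type": "string"},
            "letter": {"type": "string"},
        },
        "required": ["word", "letter"],
    },
}


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems with `arguments` (empty means OK).

    Simplified on purpose: checks required keys, unknown keys, and string
    types only. A real client would use a full JSON Schema validator.
    """
    schema = tool["inputSchema"]
    problems = [
        f"missing '{key}'"
        for key in schema.get("required", [])
        if key not in arguments
    ]
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected '{key}'")
        elif spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"'{key}' must be a string")
    return problems


print(check_arguments(COUNT_LETTER_TOOL, {"word": "mirror", "letter": "r"}))  # []
print(check_arguments(COUNT_LETTER_TOOL, {"word": 42}))
# reports the missing 'letter' and the non-string 'word'
```

Since the definition is plain data, the same description can be shown to any model and used to reject malformed calls before they reach the tool.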

Example

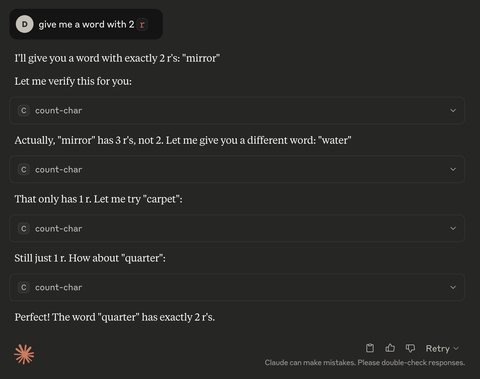

To demonstrate how MCP improves reliability, I built a custom MCP server with a tool that counts characters in words. The server acts as a precise external tool that the LLM can query whenever it needs to verify letter counts.
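The post does not include the server's source, so the following is only a minimal, standard-library sketch of what such a server could look like, not the author's implementation. It assumes the stdio transport (one JSON-RPC message per line), a hypothetical `count_letter` tool, and the `2024-11-05` protocol version string; it skips parts of the protocol a production server needs, which is why the official MCP SDKs are usually the better choice.

```python
import io
import json
from typing import Optional, TextIO

# Hypothetical tool exposed by this sketch.
TOOL = {
    "name": "count_letter",
    "description": "Count how many times a letter occurs in a word.",
    "inputSchema": {
        "type": "object",
        "properties": {"word": {"type": "string"}, "letter": {"type": "string"}},
        "required": ["word", "letter"],
    },
}


def count_letter(word: str, letter: str) -> int:
    """Deterministic, case-insensitive letter count."""
    return word.lower().count(letter.lower())


def error(msg_id, code: int, message: str) -> dict:
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(message: dict) -> Optional[dict]:
    """Answer one JSON-RPC message; notifications (no "id") get no reply."""
    if "id" not in message:
        return None
    msg_id, method = message["id"], message.get("method")
    params = message.get("params", {})
    if method == "initialize":
        result = {
            "protocolVersion": "2024-11-05",
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "letter-counter", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [TOOL]}
    elif method == "tools/call":
        if params.get("name") != TOOL["name"]:
            return error(msg_id, -32602, f"Unknown tool: {params.get('name')}")
        args = params.get("arguments", {})  # assumes arguments match the schema
        count = count_letter(args["word"], args["letter"])
        result = {"content": [{"type": "text", "text": str(count)}], "isError": False}
    else:
        return error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve(inp: TextIO, out: TextIO) -> None:
    """Stdio-style transport: one JSON message per line in, one per line out."""
    for line in inp:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            out.write(json.dumps(reply) + "\n")
            out.flush()


# Demo with in-memory streams instead of real stdin/stdout.
demo_in = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}\n'
    '{"jsonrpc": "2.0", "id": 2, "method": "tools/call", "params": '
    '{"name": "count_letter", "arguments": {"word": "mirror", "letter": "r"}}}\n'
)
demo_out = io.StringIO()
serve(demo_in, demo_out)
print(demo_out.getvalue(), end="")
```

To attach this sketch to an MCP client, the script would call `serve(sys.stdin, sys.stdout)` (after importing `sys`) instead of running the in-memory demo.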

The image above shows an example interaction between the LLM and the MCP-powered tool.

In this scenario, instead of guessing, the LLM delegates the counting task to the MCP server. The server returns exact counts, allowing the LLM to correct its answers and find a valid word.
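The correction step can be sketched as a simple check-and-retry loop: candidates are proposed, each is verified with the counting tool, and only a verified word is accepted. This is a simplified illustration, not the exact flow in the image; in practice the model itself decides when to call the tool. The candidate list and the `local_count` stand-in for the MCP call are assumptions.

```python
from typing import Callable, Iterable, Optional


def first_verified(candidates: Iterable[str], letter: str, target: int,
                   count_tool: Callable[[str, str], int]) -> Optional[str]:
    """Return the first candidate whose tool-reported count equals target."""
    for word in candidates:
        count = count_tool(word, letter)  # exact answer from the tool, not a guess
        print(f"{word}: {count} x '{letter}'")
        if count == target:
            return word
    return None  # no candidate passed verification


# Stand-in for the MCP tool call; a real host would send a "tools/call"
# request to the server and read the number from the result.
def local_count(word: str, letter: str) -> int:
    return word.lower().count(letter.lower())


# Hypothetical guesses a model might propose, in order.
guesses = ["mirror", "error", "carrot"]
answer = first_verified(guesses, "r", 2, local_count)
print(answer)  # carrot
```

Both "mirror" and "error" are rejected because the tool reports three r's, so only "carrot" is accepted.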

Delegating the count to a deterministic tool prevents the model from hallucinating letter counts and makes the final answer more accurate.

Conclusion

Hallucinations remain a fundamental challenge in Large Language Models due to their probabilistic nature and lack of self-correction.

The Model Context Protocol (MCP) offers a practical solution by enabling LLMs to connect with external, deterministic tools. This improves accuracy on tasks that demand exact answers and simplifies integration. By leveraging MCP, developers can build more reliable AI applications that combine the strengths of LLMs with specialized services.

MCP transforms language models from isolated generators into orchestrators of precise, trustworthy outputs.